Feb 13, 2026

What LLMs Actually Pull From Your Website (And What They Ignore)

Averi Academy

Averi Team

8 minutes

In This Article

LLMs read raw HTML and structured data but miss JavaScript-rendered content and code comments—use server-side rendering, Schema.org, and semantic HTML.

Updated:

Feb 13, 2026

Don’t Feed the Algorithm

The algorithm never sleeps, but you don’t have to feed it — Join our weekly newsletter for real insights on AI, human creativity & marketing execution.

Large Language Models (LLMs) don’t process websites like humans do. Instead of viewing fully rendered pages, they extract data from raw HTML - ignoring most JavaScript-executed content. This means crucial elements like product descriptions or pricing, if loaded via JavaScript, are invisible to these systems. Structured data, semantic HTML, and concise, well-organized content are what LLMs prioritize. However, they also scrape everything in your source code, including developer comments, which could unintentionally expose sensitive information. To make your site AI-friendly, focus on server-side rendering, structured data (e.g., Schema.org), and clear, accessible HTML. Avoid relying on JavaScript for critical content and clean up unnecessary code comments. By aligning your site with how LLMs operate, you can improve your visibility and credibility in AI-driven tools.

SEO for LLMs: It's just SEO (mostly)

How LLMs Process Website Content

What LLMs See vs Ignore on Websites: Key Optimization Statistics

When large language models (LLMs) crawl your website, they interact with raw HTML source code, not the fully rendered pages that your visitors see. This distinction is crucial because most AI crawlers only process the server's raw HTML response, which excludes content generated by JavaScript. For instance, during a 2025 audit of the eCommerce site Lulu and Georgia, it was discovered that product names like "Mara Candle Holder" were visible to users but absent from the Response HTML. Since these product names were injected via JavaScript, standard AI crawlers completely missed them [6].

The process begins with tokenizing raw HTML, stripping away formatting, and converting body text into embeddings. These embeddings are then mapped into a multidimensional space for contextual analysis. Long pages are divided into smaller chunks, which means that critical information buried in lengthy text blocks may lose context during this slicing process [5]. Now, let’s explore what LLMs prioritize and what they tend to ignore when analyzing website content.

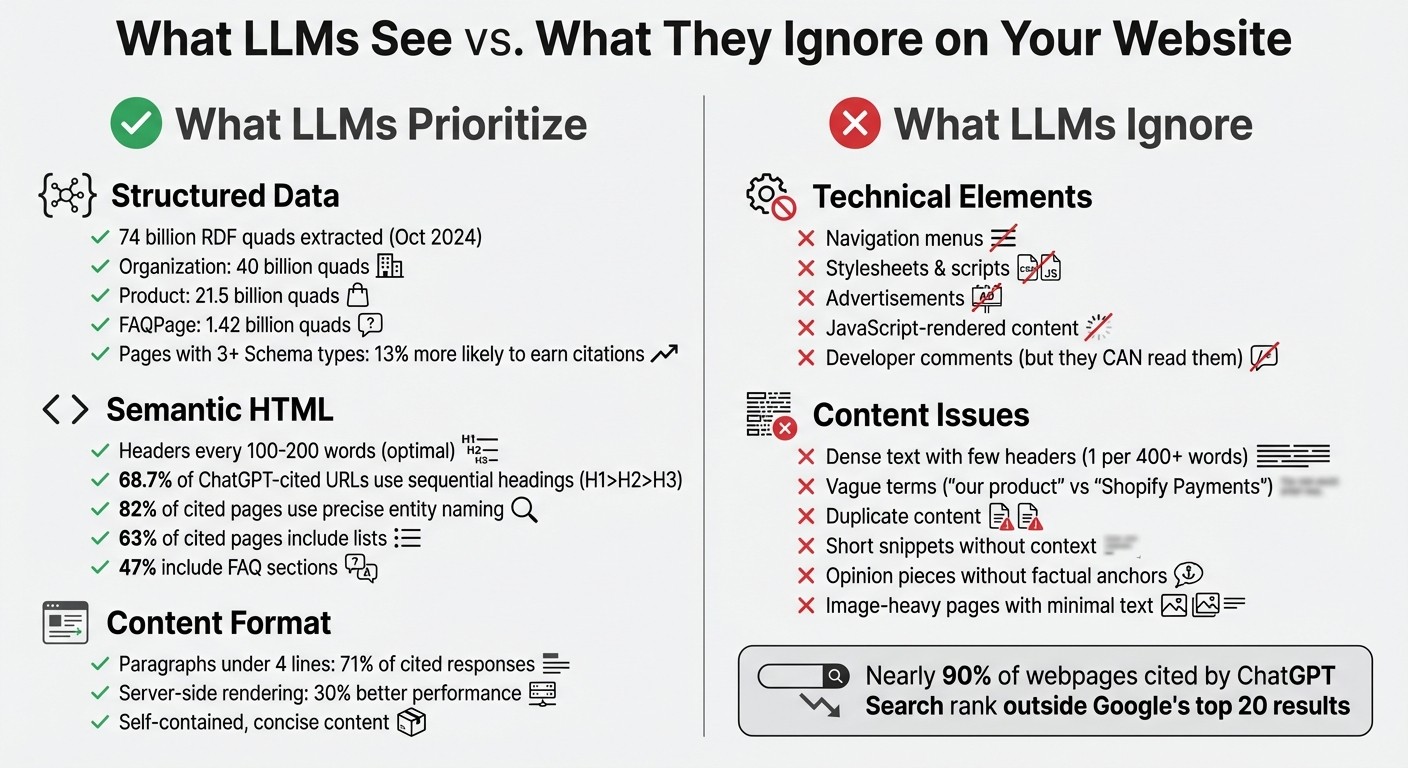

What LLMs Look For

LLMs value content that is structured, explicit, and easy to interpret. The most useful elements provide clear and factual data, with structured data formats - like Schema.org markup in JSON-LD, Microdata, or RDFa - ranked highest. In October 2024, the Web Data Commons extracted 74 billion RDF quads (structured statements) from the web, with "Organization" (40 billion quads), "Product" (21.5 billion quads), and "FAQPage" (1.42 billion quads) leading the categories [1].

Structured data isn’t directly fed into models. Instead, it undergoes "Data-to-Text" processing, where subject-predicate-object relationships (triples) are transformed into natural language sentences. As Hanns Kronenberg aptly puts it:

"Structured data is the AI's reality check" [1].

Using Schema.org to mark up details like pricing, availability, and brand names creates explicit relationships, enabling models to synthesize accurate answers.

Beyond structured data, semantic HTML elements play a key role in organizing information. Headers (H1, H2, H3) act as natural dividers, helping LLMs understand the layout and flow of content. Pages frequently cited by LLMs average one header every 100 to 200 words, while pages with fewer headers - one every 400 or more words - are often overlooked [4]. Additionally, 82% of frequently cited pages use precise entity naming, such as "Shopify Payments" instead of vague terms like "our product", anchoring facts to specific brands [4].

Lists and tables are highly favored for their ability to present information in concise, organized formats. 63% of cited pages include lists, and models often convert original content into list form when generating structured answers - whether or not the source content was originally formatted that way [4]. FAQ sections, which mirror the question-answer structure LLMs are trained on, appear on 47% of frequently cited pages [4].

LLMs also prefer short, self-contained paragraphs that retain meaning even when divided into smaller chunks. 71% of cited factual responses come from pages with paragraphs under four lines [4]. Clear, direct language - like "lasts 1–2 weeks" instead of vague phrases - helps models anchor meaning more effectively [5].

What LLMs Ignore

LLMs filter out what they deem low-value or irrelevant content. Navigation menus, stylesheets, scripts, and advertisements are typically stripped during the crawling process. For example, the C4 corpus (Colossal Clean Crawled Corpus) removes markup, JSON-LD, and boilerplate to focus on naturally readable web text [1].

Dense, unstructured text is another common casualty. A 1,500-word essay with only one H1 header can make it difficult for models to extract specific facts. Pages designed as storytelling assets, rather than scannable reference material, are rarely cited. Ana Fernandez from Previsible explains:

"Pages that win are built like reference material, not campaign assets" [4].

JavaScript-rendered content presents another major blind spot. While Googlebot processes JavaScript-executed content, most AI bots - like ChatGPT, Claude, and Perplexity - only see the raw server response. If essential elements like H1 tags, product descriptions, or pricing rely on JavaScript, these bots will miss them entirely [3].

Redundant or duplicate content is also filtered out through de-duplication processes. If the same paragraph appears across multiple pages, it may only be processed once. Similarly, short snippets - like single-sentence product descriptions - are often discarded for lacking sufficient context.

Finally, opinion pieces without factual anchors and image-heavy pages with minimal supporting text are deprioritized. LLMs are tuned to extract meaningful, structured information, making pages with limited substantive text less useful in their analysis.

How to Optimize Your Website for LLMs

Now that you know what LLMs prioritize and overlook, it’s time to fine-tune your website for AI-driven visibility. The adjustments here align closely with accessibility standards and clean web development practices. These steps will help ensure your content is accessible, well-structured, and easy for AI systems to process.

Use Clean and Semantic HTML

Start by delivering clear, server-side rendered content. Many AI bots only read raw HTML and ignore JavaScript-executed elements. For example, product names, headlines, and pricing injected via JavaScript often remain undetected by these systems [6]. The solution? Prioritize server-side rendering (SSR) or static-site generation (SSG) for critical acquisition pages. Websites serving complete server-side HTML typically see 30% better performance compared to those relying on client-side rendering [6].

Next, ensure your heading hierarchy is logical and sequential. Use only one H1 per page, and follow a proper structure (H1 > H2 > H3) without skipping levels. Sequential headings improve AI comprehension, making pages 2.8 times more likely to earn citations from AI systems [7]. For instance, 68.7% of URLs cited by ChatGPT adhere to this structure, compared to just 23.9% of Google's top results [7]. Skipping from H2 to H4 disrupts the "chunking" process LLMs use to understand relationships between sections [2].

Additionally, remove unnecessary code comments to avoid unintended disclosures. Unlike traditional search engines, AI bots can read developer notes. Sam Torres, Chief Digital Officer at Pipedrive, cautions:

"LLMs actually see the content of comments in your code … there might be rants, Easter eggs, confidential information" [3].

Once your HTML is clean and visible, structured data can further enhance how LLMs process your site.

Add Structured Data

Structured data acts as a clarity booster for AI systems. Implementing Schema.org markup - especially in JSON-LD format - provides clear relationships that help models synthesize accurate answers. Pages using three or more Schema types are 13% more likely to earn AI citations [7]. Focus on high-value types like FAQPage, Product, HowTo, and Article. These formats align with the question-and-answer structure LLMs are trained on, making your content easier to extract and reference. Hanns Kronenberg, an AI SEO specialist, sums it up well:

"Those who only provide text, provide material. Those who provide structured data, provide answers" [1].

To further solidify your presence, use unique identifiers like @id in your Schema.org markup. This anchors your brand, products, and organization in the model's knowledge base, helping LLMs verify facts both during pre-training and real-time queries [1].

With strong structural elements in place, it’s equally important to avoid common mistakes that can hinder your content’s visibility.

Avoid Common Pitfalls

Even with clean HTML and structured data, certain errors can reduce AI readability. Here’s what to watch out for:

Use specific, descriptive link text. Avoid vague anchors like "Click here" or "Learn More." LLMs rely on link text for understanding context, and repetitive or unclear anchors can create "knowledge conflicts", causing the model to disregard later links [2]. Instead, use text that clearly describes the link's destination.

Eliminate duplicate content. If the same paragraph appears on multiple pages, LLMs may only process it once. Similarly, overly short pages - like single-sentence product descriptions - are often ignored for lacking context. Aim for self-contained paragraphs under four lines that retain meaning even when broken into smaller chunks [4].

Ensure key information is in raw HTML. Use the "View Page Source" feature in Chrome to audit your site. If critical details like pricing or descriptions don’t appear there, they’re likely invisible to most LLMs [3]. You can also add an

llms.txtfile at your site root to direct AI systems to canonical resources, APIs, and key guides - similar to howrobots.txtworks for search engines [8].

Data Cleaning Processes Used by LLMs

When examining how LLMs clean data, it becomes clear why certain elements of a website are overlooked. These systems don’t indiscriminately gather everything - they use advanced filters to retain only high-quality, relevant content for training.

Text Extraction and De-duplication

LLMs depend on tools like Trafilatura to extract meaningful content while ignoring unnecessary elements. These tools leverage semantic HTML tags (e.g., <article>, <main>) to differentiate core content from peripheral elements like ads, sidebars, and navigation menus [2]. J. Hogue, Director of Experience Design at Oomph Inc, explains:

"Semantic elements (<article>, <nav>, <main>, <h1>, etc.) add context and suggest relative ranking weight. They make content boundaries explicit, which helps retrieval systems isolate your content from less important elements like ad slots" [2].

Once extracted, the content is divided into logical units, such as paragraphs grouped by headings, and converted into embeddings. These embeddings are numerical vectors that represent the semantic meaning of the text, allowing the system to focus on concepts rather than just keywords [5][2].

An important part of this process involves filtering out redundant link text. For instance, if several URLs use identical anchor text like "Learn More", the model might prioritize the first link while ignoring others. This impacts how content is vectorized and anchored [2].

Following extraction, LLMs refine content further through specific heuristics.

Heuristics and Filters

Once content is divided and vectorized, LLMs apply rigorous heuristics to ensure only the most useful data is retained. A key element in this process is Response HTML, which acts as a primary filter. Most AI systems process only the raw server response and don’t execute JavaScript, meaning any content rendered on the client side is excluded [3]. Sam Torres, Chief Digital Officer at Pipedrive, explains:

"They see the response HTML … If you do a view page source in Chrome … All of that is what an LLM typically sees" [3].

Thin or low-quality pages are also discarded because they lack sufficient data for effective vectorization. Similarly, vague phrases like "Click here" are deprioritized due to their lack of meaningful context. Instead, the system favors content with clear metadata, structured headings, and machine-readable formats that meet specific standards [2].

This meticulous filtering process ensures that only the most relevant and structured data contributes to the training of LLMs.

Conclusion: Aligning Your Website with LLM Capabilities

The same principles that once made your site friendly to search engines - clean HTML, structured data, and logical hierarchies - are now essential for AI systems to extract and cite your content. Since large language models (LLMs) prioritize Response HTML over content rendered by JavaScript, it’s critical to ensure that your most important copy is present in the raw server response, not just after client-side execution [3][6].

Start by auditing your site's raw content. Use tools to compare the Response HTML with the fully rendered version of your page. If key elements like H1 tags, product descriptions, or internal links are only visible after rendering, move them server-side. This adjustment can significantly improve your visibility across AI platforms.

Shift your focus from keyword-heavy content to entity-based optimization. LLMs store information about entities - such as your brand, products, or team - and their attributes. Incorporate Schema.org JSON-LD to provide machine-readable data like prices, dates, and authorship. Adding a concise TL;DR summary (no more than 50 words) at the top of your key pages, along with structured FAQs containing 6–10 question-and-answer pairs, makes your content easier for AI to process and cite [1][8].

Track your performance metrics, as brand search volume now correlates with how often your content is cited by AI systems [9]. Measure your share of voice - how frequently your brand appears in AI-generated responses compared to competitors - and monitor citation frequency on platforms like ChatGPT, Perplexity, and Google AI Overviews. Tools like Profound (starting at $400+ per month) and Peec AI (ranging from $100 to $550 per month) can help you analyze these metrics [9].

Interestingly, nearly 90% of webpages cited by ChatGPT Search rank outside Google’s top 20 results [8]. By optimizing your site with clean HTML, structured data, and a focus on entities, you can ensure your brand is not only discoverable but also cited and trusted in this evolving AI-driven marketing landscape.

FAQs

How can I check what an LLM can actually see on my pages?

Large Language Models (LLMs) primarily rely on visible HTML content, structured data, and metadata to interpret web pages. They typically overlook content loaded dynamically through JavaScript unless they are specifically designed to handle it. Elements like page titles, the first 500–1000 characters of text, and structured answer blocks receive the most attention. To improve visibility, prioritize fast HTML rendering, use clear and accurate structured data, and ensure content is crawlable by employing semantic HTML.

Which site content should be server-rendered instead of JavaScript-rendered?

Critical information that AI systems and crawlers rely on should always be server-rendered. This includes key elements like core page content, metadata, structured data (such as schema markup), and other essential text. Relying solely on JavaScript to load content can create issues, as large language models (LLMs) and crawlers might not fully process it. This could result in missed signals and decreased visibility in AI-powered search and automation systems.

What Schema.org markup should I add first for better AI citations?

Start by implementing Schema.org structured data on your website. This transforms your site's information into clear, machine-readable statements through Data-to-Text processes. By doing so, large language models (LLMs) can better incorporate your content into their knowledge base, enhancing the likelihood that AI systems will reference and cite your website accurately.